AI safety expert: 90% chance of existential threat

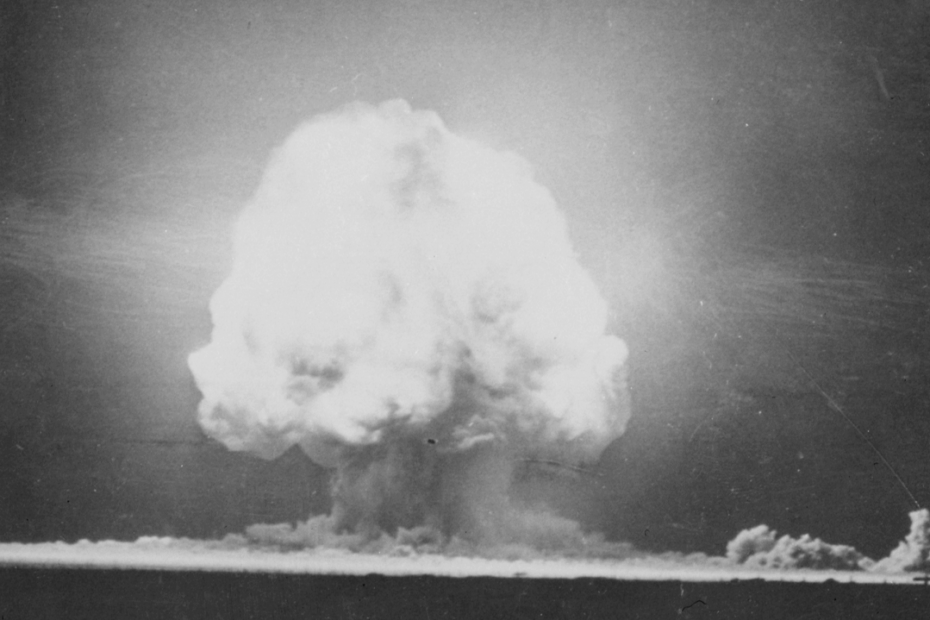

AI safety expert Max Tegmark warns that companies should replicate the safety calculations from Oppenheimer’s Trinity nuclear test before releasing superintelligent AI. By his own calculation, there is a 90% probability that highly advanced AI poses an existential threat to humanity.

The comparison? Oppenheimer’s team only proceeded after determining a “vanishingly small chance” of the bomb igniting Earth’s atmosphere.

Today’s AI race operates on the principle: “Move fast and break…everything.”

The four jobs safe from robot takeover

Elon Musk says that probably none of us will have a job once AI and robotics take over. Bill Gates agrees that humans won’t be needed for “most things.” Geoffrey Hinton and Vinod Khosla predict massive labour replacement.

But some careers remain robot-proof: pop star, athlete, politician, and priest.

Time to look at your CV… which of these is the next rung on your career ladder?

Time to become an AI prepper

Washington Post columnist Megan McArdle warns that it’s time to start preparing for AI’s impact: “Anyone with a job that involves words, data, or ideas needs to become an AI prepper.”

The advice? Start learning to work with AI now, because pretending it won’t affect your industry isn’t a strategy.

Look at it this way: you’re not being replaced — you’re being upgraded to “human premium edition.”

https://www.washingtonpost.com/opinions/2025/05/06/ai-jobs-prepper

Zuck’s vision: AI friends for everyone

Mark Zuckerberg wants you to have AI companions who know you better than you know yourself. The Meta CEO envisions a future where AI friends outnumber humans, chatbots replace therapists, and your feed algorithm becomes your best buddy.

‘Cos nothing says healthy social life like paying subscription fees for friendships.

https://www.wsj.com/tech/ai/mark-zuckerberg-ai-digital-future-0bb04de7

Corporate rebranding: The “Ctrl+B” epidemic

Amazon’s brand refresh is the latest example: 2025’s biggest rebrands (Walmart, OpenAI, Amazon) have essentially just hit “bold” on their logos. From PayPal to Reddit, everyone’s jumping on the thick-font bandwagon. The 2019 Slack redesign might be patient zero of this typography pandemic.

Expect underlined italics next year and dingbats the year after.

https://www.fastcompany.com/91328583/why-every-company-is-hitting-ctrl-b-bold-logo-font

AI cheating crisis hits universities

With students using AI to complete their assignments, ChatGPT has unravelled the entire academic project.

Ethics professor Troy Jollimore warns: “Massive numbers of students are going to emerge from university with degrees, and into the workforce, who are essentially illiterate.”

On the bright side, they’ll fit right in with the rest of us who “read” the terms and conditions.

Amazon’s secret AI coding project “Kiro”

Amazon is developing an AI tool called “Kiro” that uses agents to streamline software coding. The multimodal interface will auto-generate technical docs, flag issues, and offer optimisations.

Soon programmers can join baristas in the “replaced by robots” club – won’t somebody please think about the coffee?

https://www.businessinsider.com/amazon-kiro-project-ai-agents-software-coding-2025-5

Dead man delivers AI victim impact statement

In a world first, an AI recreation of deceased road rage victim Chris Pelkey addressed his killer in an Arizona court. Speaking from beyond the grave, the AI Pelkey said: “In another life, we probably could have been friends.”

Death is no longer the end, it seems. Now it’s a career pivot into the afterlife influencer space.

https://www.theguardian.com/us-news/2025/may/06/arizona-road-rage-victim-ai-chris-pelkey

AI hallucinations getting worse

“Reasoning” AI systems are producing more incorrect information despite being more powerful. A Cursor AI bot incorrectly announced policy changes that didn’t exist, leading to angry customers and cancelled accounts.

At least when humans lie, we have the courtesy to look guilty about it. Some of us. Sometimes.

https://www.nytimes.com/2025/05/05/technology/ai-hallucinations-chatgpt-google.html

Prompt engineering jobs disappearing

Prompt engineering is “quickly going extinct three years into the AI boom.” What was once a specialised role is now an expected basic skill. As one headline says, “AI is already eating its own.”

The machines are eliminating the people who tell them what to do – sounds like they’ve mastered office politics already.

https://www.fastcompany.com/91327911/prompt-engineering-going-extinct

OpenAI scales back corporate restructuring

OpenAI has dialled back its ambitious corporate reorganisation plans, raising questions about AI safety, investors’ potential profits, and the company’s ongoing fight with Elon Musk. Sam Altman’s “Plan B” leaves many wondering what the future holds for the consequential AI developer.

Turns out saving humanity is harder than making a PowerPoint about saving humanity.

https://www.nytimes.com/2025/05/06/business/dealbook/altman-openai-plan-b.html